Computed Context vs. Curated Context: Why ‘Best’ Doesn’t Mean What It Used To

🔍 The Signal – The Big Shift

For most of SEO’s history, “best” lists were curated. If you landed on Bankrate’s Best CD Rates or PCMag’s Best Laptops, you were visible. The writer or aggregator acted as the filter: deciding who qualified, what details to highlight, and what to leave out.

The critical point: presence equaled potential. Once you were on the list, you were in the game. The definition of “best” was subjective — and negotiable.

In the GEO/AI era, that safety net disappears. “Best” is no longer curated. It’s computed.

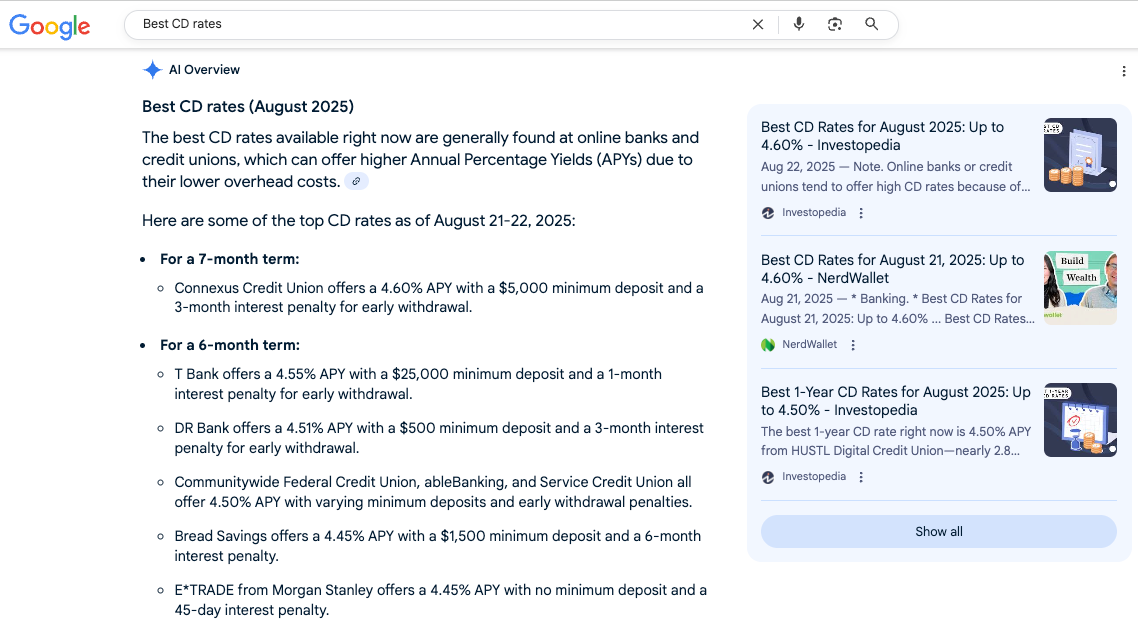

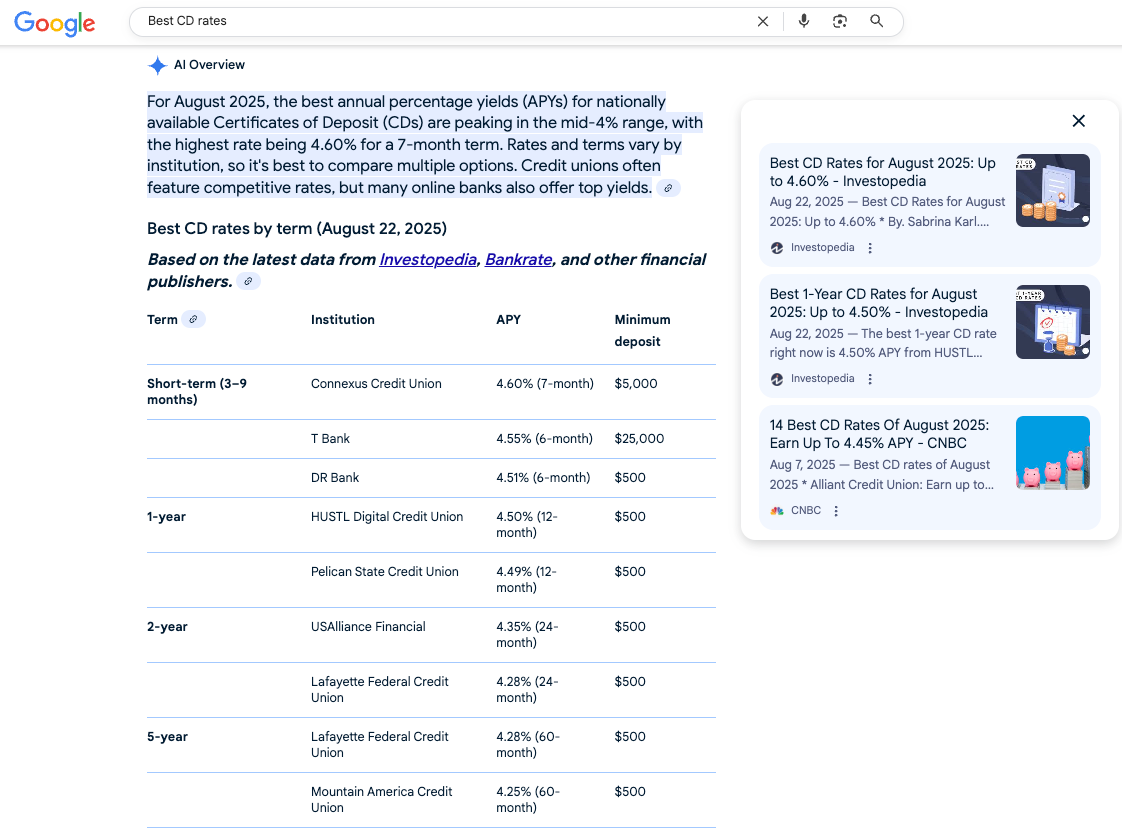

“Google’s AI answer: multiple CDs surfaced, all clustered at 4.40–4.60% APY — clear evidence of computed thresholds at work.”

There are only three links, on the right, and they point to the aggregators. The question is: do I even need to visit them? If any of these answers works for me, I’ll search for the vendor. It would be great if Google linked to the banks it surfaced, but it did not, and I suspect it never visited their sites to revalidate the information.

Even more interesting: when I went back the next day and ran the exact same search, I got a different set of results. This time, they were presented in a table and updated for that day. Again, there were no links to any of the individual banks or credit unions.

When someone asks Google or ChatGPT for the “best CD rates,” the system doesn’t lean on human judgment. It must mechanically define “best” in real time and enforce that definition through filters.

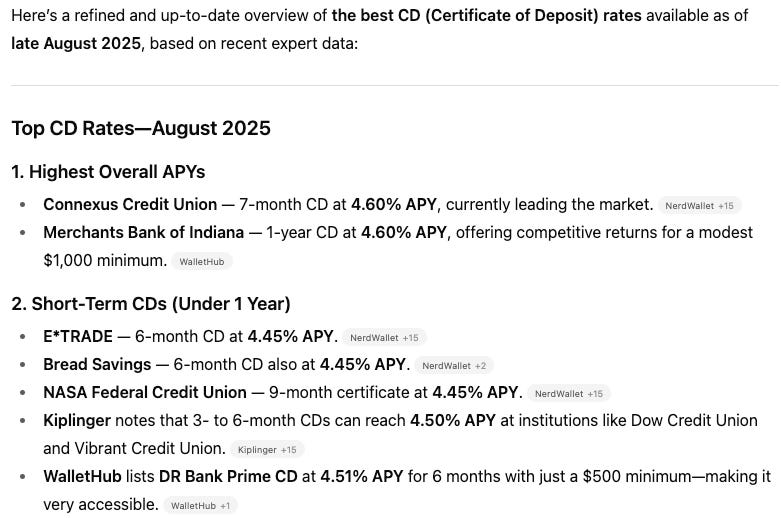

“ChatGPT’s recommendations mirrored Google’s, clustering around the same APY band — reinforcing that ‘best’ is being mechanically computed, not subjectively curated.”

This is where most brands stumble. They assume visibility equals eligibility. In reality, there are hidden gates you have to pass through before you can even appear in the answer.

⚙️ The Friction – Why Visibility Isn’t Enough

In traditional SEO, once you were indexed, you could fight your way into visibility. A weak offer might still sneak into a curated list because an editor wanted more variety or because your PR team had influence.

But AI-generated answers are unforgiving. They rely on structured logic, not subjective judgment. Even if your content is indexed, you won’t appear unless you clear all the required gates.

When I asked ChatGPT why it chose the CDs it did, it revealed six screening criteria. Notice how each one goes beyond “just the rate”:

1. Rate Competitiveness – Preference for APYs in the 4.40%–4.60% band, verified across multiple aggregators.

2. Liquidity & Term Variety – Included short-term, one-year, and no-penalty CDs to match different saver profiles.

3. Minimum Deposit Requirements – Favored low-barrier entry points ($500–$1,000) while flagging jumbo CDs separately.

4. Institution Type & Access – Prioritized banks and credit unions that are broadly available to consumers and have clear membership requirements.

5. FDIC/NCUA Insurance – Excluded anything not explicitly insured.

6. Freshness & Consensus – Focused on late-August 2025 data, validated across multiple trackers to avoid stale promos.
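Read as logic, these criteria behave like a chain of hard filters followed by a diversity step. The Python sketch below is a hypothetical reconstruction for illustration, not how ChatGPT actually works: the `CDOffer` fields, the 14-day freshness window, and the two-source minimum are assumptions. The 4.40%–4.60% band is modeled as a floor only, because a higher APY should never be screened out, and jumbo CDs are simply excluded rather than flagged.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class CDOffer:
    institution: str
    apy: float                     # annual percentage yield, in percent
    term_months: int
    no_penalty: bool               # early withdrawal allowed without a penalty
    min_deposit: float             # minimum opening deposit, USD
    insured: bool                  # explicitly FDIC- or NCUA-insured
    broadly_available: bool        # open to most consumers, clear membership rules
    as_of: date                    # date the rate was last observed
    sources: tuple[str, ...] = ()  # aggregators/trackers that list this rate

def term_bucket(o: CDOffer) -> str:
    """Criterion 2: group offers so different saver profiles are represented."""
    if o.no_penalty:
        return "no-penalty"
    if o.term_months < 12:
        return "short-term"
    if o.term_months == 12:
        return "one-year"
    return "long-term"

def screen(offers: list[CDOffer], today: date, apy_floor: float = 4.40,
           max_min_deposit: float = 1_000.0, max_age_days: int = 14,
           min_sources: int = 2) -> dict[str, CDOffer]:
    """Apply criteria 1 and 3-6 as hard filters, then criterion 2 as a diversity step.

    All thresholds are hypothetical defaults, not values ChatGPT disclosed.
    """
    eligible = [
        o for o in offers
        if o.apy >= apy_floor                       # 1. rate competitiveness (floor)
        and o.min_deposit <= max_min_deposit        # 3. low-barrier entry point
        and o.broadly_available                     # 4. institution type & access
        and o.insured                               # 5. FDIC/NCUA insurance
        and (today - o.as_of).days <= max_age_days  # 6a. freshness
        and len(set(o.sources)) >= min_sources      # 6b. consensus across trackers
    ]
    # 2. Term variety: keep the highest-APY eligible offer in each term bucket.
    best: dict[str, CDOffer] = {}
    for o in eligible:
        bucket = term_bucket(o)
        if bucket not in best or o.apy > best[bucket].apy:
            best[bucket] = o
    return best
```

A single failed check removes an offer before term variety is even considered; there is no partial credit.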

How These Map to the Five Eligibility Gates

What struck me when I looked at ChatGPT’s six screening criteria is how neatly they collapse into a broader set of universal filters. In fact, they were the spark for a framework I call the Five Eligibility Gates — the hidden checkpoints that determine whether an offer gets included in an AI-generated “best of” answer.

Visibility Gate → Several of the criteria are about being findable and accessible — appearing in trusted sources, being nationally available, and ensuring the data is fresh. (Criteria 1, 4, 6)

Completeness Gate → Others deal with whether all the critical details are present — APY, deposit minimums, insurance, membership rules. Miss one, and you’re out. (Criteria 2, 3, 5)

Threshold Gate → Rate competitiveness worked as a hard floor. In this case, only APYs ≥ 4.40% qualified. (Criterion 1)

Competitiveness Gate → But hitting the floor wasn’t enough. Weaker offers (e.g., a 4.40% CD next to several at 4.60%) were ignored when stronger ones were available. (Criterion 1, implied)

Consensus Gate → Finally, freshness and cross-checking across multiple trackers highlight the need for corroboration. If the data only appears in one place, it doesn’t count. (Criterion 6)

These five gates are the distilled version of what the AI was doing behind the scenes. In other words, “best” isn’t a matter of opinion anymore — it’s the result of clearing these eligibility checkpoints.
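The gates apply in sequence, and an offer drops out at the first one it fails. Here is a minimal Python sketch of that logic, assuming offers are plain dictionaries. The required-attribute list, the 4.40% floor, the 0.15-point allowed gap to the leader, and the two-source consensus rule are hypothetical values chosen to mirror the CD example, not parameters any AI engine has disclosed.

```python
from typing import Callable, Optional

# A gate is a (name, predicate) pair; the predicate sees one offer and the full peer set.
Gate = tuple[str, Callable[[dict, list], bool]]

# Hypothetical checklist and cut-offs, chosen only to mirror the CD example.
REQUIRED = ("apy", "min_deposit", "insured")
APY_FLOOR = 4.40           # threshold gate: hard floor
MAX_GAP_TO_LEADER = 0.15   # competitiveness gate: allowed gap to the best peer, in points

def best_peer_apy(offer: dict, peers: list) -> float:
    """Highest APY among peers that report one (falls back to the offer's own APY)."""
    return max((p["apy"] for p in peers if p.get("apy") is not None), default=offer["apy"])

GATES: list[Gate] = [
    ("visibility",      lambda o, ps: len(o.get("sources", [])) >= 1),
    ("completeness",    lambda o, ps: all(o.get(k) is not None for k in REQUIRED)),
    ("threshold",       lambda o, ps: o["apy"] >= APY_FLOOR),
    ("competitiveness", lambda o, ps: o["apy"] >= best_peer_apy(o, ps) - MAX_GAP_TO_LEADER),
    ("consensus",       lambda o, ps: len(set(o["sources"])) >= 2),
]

def first_failed_gate(offer: dict, peers: list, gates: list[Gate] = GATES) -> Optional[str]:
    """Run the gates in order; return the first one the offer fails, or None if it passes all."""
    for name, passes in gates:
        if not passes(offer, peers):
            return name
    return None
```

Returning the name of the failed gate, rather than a bare pass/fail, is what makes this useful for diagnosis: it tells you whether the fix is distribution, data, pricing, positioning, or consistency.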

The Five Eligibility Gates

These gates determine whether you make it into an AI-generated “best of” answer. Miss one, and you’re invisible.

1. Visibility Gate – Are you in the data sources?

What it means: If your offer isn’t present in trusted sources (aggregators, directories, or structured data on your own site), you don’t exist to the AI.

Example: A bank with a 4.6% CD that isn’t listed on Bankrate, NerdWallet, or its own FDIC page won’t be surfaced.

How to succeed:

Syndicate your data to the major aggregators in your category.

Structure your own site with schema (APY, reviews, specs) so the AI can read it directly.

Don’t assume one channel is enough — redundancy is protection.
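For the “structure your own site” step, here is a hedged Python sketch that emits a schema.org JSON-LD tag for a single CD. `DepositAccount`, `BankOrCreditUnion`, `MonetaryAmount`, and `annualPercentageRate` are schema.org vocabulary, but mapping APY, term, and insurance onto them this way is an assumption; the function name, bank name, product name, and URL are placeholders. Run the output through a structured-data validator before publishing.

```python
import json

def cd_jsonld(bank: str, product: str, apy: float, term_months: int,
              min_deposit: float, insurer: str, url: str) -> str:
    """Render one CD as a schema.org JSON-LD <script> tag (illustrative sketch)."""
    data = {
        "@context": "https://schema.org",
        "@type": "DepositAccount",   # schema.org type for interest-bearing deposit accounts
        "name": product,
        "url": url,
        "provider": {"@type": "BankOrCreditUnion", "name": bank},
        # annualPercentageRate appears to be the closest rate property; verify the
        # best fit for APY against schema.org before relying on it.
        "annualPercentageRate": apy,
        "amount": {"@type": "MonetaryAmount", "minValue": min_deposit, "currency": "USD"},
        # No dedicated term or deposit-insurance property is assumed here, so both
        # are stated in plain text where a reader (or a model) can still find them.
        "description": f"{term_months}-month certificate of deposit, insured by the {insurer}.",
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")
```

Generating the markup from the same data that feeds your aggregator listings also helps keep the numbers identical everywhere.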

2. Completeness Gate – Did you provide the required details?

What it means: AI can only use what it can read. Missing attributes = exclusion.

Example: A CD rate page without an explicit statement of FDIC insurance, or a hotel page without cancellation-policy details, will be skipped whenever that attribute is part of the selection criteria.

How to succeed:

Build a checklist of required attributes for your category (rates, terms, insurance, star ratings, specs, warranty, etc.). If in doubt, ask the AI engine to tell you the criteria it would use for each prompt.

Use structured data markup to make attributes machine-readable.

Run regular audits to catch missing or outdated information.
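An attribute audit is easy to automate. A minimal Python sketch follows; the per-category checklists are hypothetical examples assembled from the attributes named in this article, so replace them with the criteria the AI engine reports for your own prompts.

```python
# Hypothetical per-category checklists; derive real ones from the criteria the AI reports.
REQUIRED_ATTRIBUTES = {
    "cd": ["apy", "term_months", "min_deposit", "insurance", "membership_rules"],
    "hotel": ["nightly_rate", "star_rating", "cancellation_policy"],
}

def audit(listing: dict, category: str) -> list[str]:
    """Return the required attributes that are missing or empty in a listing."""
    # 0 and False count as present; only None, "" and [] are treated as missing.
    return [k for k in REQUIRED_ATTRIBUTES[category] if listing.get(k) in (None, "", [])]
```

Running this on every page and feed on a schedule turns “regular audits” into a report of exactly which attributes would get an offer skipped.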

3. Threshold Gate – Do you meet the baseline criteria?

What it means: Offers below the cut line are filtered out.

Example: In my CD test, ChatGPT only included APYs between 4.40% and 4.60%. A 4.30% CD wasn’t even considered: because my original request didn’t qualify “best” any further, ChatGPT treated APY as the key criterion.

How to succeed:

Design offers to beat the floor. If you can’t compete on raw metrics (price, APY, reviews), reposition around unique qualifiers (a longer warranty, no-penalty terms, niche benefits).

Don’t chase “best” if you can’t meet the thresholds AI will use to define it; optimize for “alternative” or “specialized” instead.
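The repositioning advice reduces to a simple decision. This is a toy Python sketch, assuming a single numeric floor (the 4.40% cut line from the CD test) and a list of qualifiers you can honestly claim. The function name and output phrasing are hypothetical, and the sketch does not check whether the offer would actually win the narrower question it points to.

```python
def positioning(apy: float, floor: float, qualifiers: list[str]) -> str:
    """Suggest which question to compete for, given a hypothetical APY floor."""
    if apy >= floor:
        return "compete for 'best CD rates'"        # clears the threshold gate on raw APY
    if qualifiers:
        # Below the floor: lead with a qualifier that defines a narrower question.
        return f"target 'best {qualifiers[0]} CD'"
    return "position as an alternative"           # no floor, no qualifier: don't chase "best"
```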

4. Competitiveness Gate – Do you stand out against peers?

What it means: Meeting the minimum isn’t enough. If your offer is weaker than alternatives, it will be down-ranked or skipped.

Example: A 4.40% CD looks weak next to several 4.60% CDs, especially if it lacks an explicit statement of FDIC insurance or carries a shorter term or a higher minimum deposit. Similarly, a SaaS tool with 25 integrations looks inferior to competitors with 150.

How to succeed:

Explicitly highlight your differentiators (lower fees, faster delivery, exclusive features).

Frame them in comparative terms that the AI can parse (“10% faster than industry average”).

Make your edge quantifiable and clear.
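“Make your edge quantifiable” can be taken literally. This small Python sketch turns a metric into a comparative claim against the peer average and declines to produce one when there is no edge. The function name, the use of the peer average as the baseline, and the output wording are all assumptions.

```python
from statistics import mean
from typing import Optional

def comparative_claim(metric: str, ours: float, peers: list,
                      higher_is_better: bool = True) -> Optional[str]:
    """Return a quantified claim against the peer average, or None if there is no edge.

    Assumes a non-empty peer list with a nonzero average.
    """
    avg = mean(peers)
    pct = (ours - avg) / avg * 100        # relative difference vs. the peer average
    if not higher_is_better:
        pct = -pct                        # for fees, delivery time, etc., lower wins
    if pct <= 0:
        return None                       # no edge: don't publish a comparative claim
    return f"{metric}: {pct:.0f}% better than the peer average ({ours:g} vs. {avg:g})"
```

For example, `comparative_claim("integrations", 150, [25, 50, 75])` yields a claim of 200% better than the peer average, while a 4.40% APY against peers at 4.60% yields nothing to say.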

5. Consensus Gate – Is your information validated across sources?

What it means: One-off data looks like noise. Multiple independent sources give credibility.

Example: A “flash promo” rate listed on one obscure site won’t survive. But if Bankrate, NerdWallet, and your own site show the same number, it feels real.

How to succeed:

Ensure data consistency across all listings, PR, and feeds.

Update frequently — stale data gets filtered out.

Encourage independent third-party coverage that mirrors your claims.
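A consensus check can be approximated by asking whether enough sources report the same number. Here is a minimal Python sketch, assuming rates are collected per source into a dictionary, compared after rounding to two decimals, and that two agreeing sources count as corroboration. Real systems may weigh sources differently; like the example above, the sketch counts your own site as one source among several.

```python
from collections import Counter
from typing import Optional

def corroborated_rate(reports: dict[str, float], min_sources: int = 2) -> Optional[float]:
    """Return the most commonly reported rate if at least min_sources agree, else None."""
    counts = Counter(round(v, 2) for v in reports.values())  # 2-decimal tolerance (assumed)
    if not counts:
        return None
    value, n = counts.most_common(1)[0]
    return value if n >= min_sources else None
```

A flash promo seen on one site returns `None`; the same rate on Bankrate, NerdWallet, and your own site returns that rate.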

💥 The Realization – What It Means and What to Do Next

The CD rate example is just the tip of the iceberg. The same gates are at work in every category:

Banking & Finance: Mortgage rates, credit cards, savings accounts — missing fees, membership rules, or insurance details? You’re out.

Travel & Hospitality: Hotels and flights — lack of cancellation terms or inconsistent pricing knocks you out.

Healthcare: Doctors and clinics — no credentials or insurance info, no inclusion.

E-Commerce: Products — missing specs, warranty, or shipping terms means invisibility.

Education & SaaS: Courses and software — without reviews, integrations, or clear features, you’ll never surface as “best.”

Closing Thought – From Curated to Computed

The asymmetry is stark:

Curated Context (SEO Era): Get on the list and you’re in. Editors smoothed over gaps, and “best” was subjective.

Computed Context (GEO Era): No smoothing, no exceptions. Miss a gate and you’re excluded. “Best” is a math problem.

For businesses, this means a new mandate:

Be visible in the right sources.

Publish complete, structured, up-to-date data.

Meet or beat thresholds.

Highlight comparative advantages.

Maintain consistency across channels.

Bottom line: In the AI era, “best” (or any other qualifier) has moved from subjective curation to objective computation. The winners will be those who stop optimizing just for visibility and start optimizing for eligibility.

📦 Breakout Box: The Economics of “Best” — Search Theory Meets AI Filters

Writing this article connected directly with my ongoing postgraduate research on Search Theory.

Search Theory (Stigler, 1961):

In economics, search has a cost. Buyers weigh how much effort to spend finding information versus settling for what’s visible.

Curated SEO lists reduced search costs by outsourcing the work to editors and aggregators.
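Stigler’s trade-off can be stated compactly. This is a minimal formulation, assuming a buyer who collects quotes from $n$ sellers, pays the lowest price $p_{\min}(n)$ found, and incurs a constant cost $c$ for each additional quote (notation introduced here for illustration). The buyer keeps searching while one more quote is expected to save more than it costs, and stops at

$$
n^{*} = \min\left\{ n \ge 1 \;:\; \mathbb{E}\left[p_{\min}(n)\right] - \mathbb{E}\left[p_{\min}(n+1)\right] < c \right\}
$$

For example, if prices were uniformly distributed on $[0, 1]$, then $\mathbb{E}[p_{\min}(n)] = \frac{1}{n+1}$, and the expected saving from one more quote is $\frac{1}{n+1} - \frac{1}{n+2} = \frac{1}{(n+1)(n+2)}$, which shrinks quickly as $n$ grows. Curated lists and AI answers both work by lowering the consumer’s effective $c$.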

What Changes in AI/GEO:

AI collapses search costs to nearly zero for the consumer by mechanically applying filters (rates, attributes, consensus).

But those costs don’t vanish — they shift upstream. Businesses now bear the burden of supplying structured, validated, and competitive data or risk exclusion.

Information Asymmetry:

Traditional SEO allowed weak offers to sneak in because editors could overlook missing details or SEO teams could game visibility.

AI reduces asymmetry by requiring explicit disclosure and cross-source validation.

But a new asymmetry emerges: brands that understand the hidden gates vs. those that still think visibility alone is enough.

The Big Takeaway:

The economics of “best” have flipped. AI answers move search costs from the demand side (the consumer hunting for information) to the supply side (the business proving eligibility).